Four Easy Ways You Can Turn DeepSeek Into Success

Depending on how much VRAM your machine has, you may be able to take advantage of Ollama's ability to run multiple models and handle multiple concurrent requests by using DeepSeek Coder 6.7B for autocomplete and Llama 3 8B for chat. If your machine can't handle both at the same time, try each of them and decide whether you prefer a local autocomplete or a local chat experience; you can then use a remotely hosted or SaaS model for the other. Assuming you already have a chat model set up (e.g. Codestral, Llama 3), you can keep this whole experience local thanks to embeddings with Ollama and LanceDB.

DeepSeek Coder: can it code in React? The 236B DeepSeek-Coder-V2 runs at 25 tokens/sec on a single M2 Ultra. Although DeepSeek has achieved significant success in a short time, the company is primarily focused on research and has no detailed plans for commercialisation in the near future, according to Forbes.

Reinforcement learning: the model uses a more sophisticated reinforcement learning approach, including Group Relative Policy Optimization (GRPO), which uses feedback from compilers and test cases, along with a learned reward model, to fine-tune the Coder.

As of now, Codestral is our current favorite model capable of both autocomplete and chat. What's behind DeepSeek-Coder-V2 that makes it special enough to beat GPT4-Turbo, Claude-3-Opus, Gemini-1.5-Pro, Llama-3-70B and Codestral in coding and math? DeepSeek's initial attempts to beat the benchmarks led to models that were fairly mundane, similar to many others. Yet despite supposedly lower development and usage costs, and lower-quality microchips, the results of DeepSeek's models have propelled it to the top spot in the App Store, where the app is rated 4.6 out of 5 in the Productivity category.

Transformer architecture: at its core, DeepSeek-V2 uses the Transformer architecture, which processes text by splitting it into smaller tokens (like words or subwords) and then uses layers of computation to understand the relationships between these tokens.

High throughput: DeepSeek-V2 achieves a throughput 5.76 times higher than DeepSeek 67B, generating text at over 50,000 tokens per second on a single server node with eight H800 GPUs.
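
In a standard Transformer, the layers that relate tokens to one another are self-attention layers. A minimal, dependency-free sketch of single-head scaled dot-product attention, softmax(QKᵀ/√d)·V, follows; the three two-dimensional "token" vectors are made-up toy values, and DeepSeek-V2's production attention layers are considerably more elaborate than this.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(
    q: list[list[float]], k: list[list[float]], v: list[list[float]]
) -> tuple[list[list[float]], list[list[float]]]:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(k[0])
    weights, outputs = [], []
    for qi in q:
        # Similarity of this query token to every key token, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted mix of all value vectors.
        outputs.append([sum(wj * vj[c] for wj, vj in zip(w, v)) for c in range(len(v[0]))])
    return outputs, weights


# Three toy token embeddings. In self-attention, Q, K and V are projections of the
# same tokens; identity projections are used here for simplicity.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = attention(tokens, tokens, tokens)
for row in w:
    print([round(x, 3) for x in row])
```

Each row of the printed weight matrix sums to 1 and says how strongly one token attends to every token in the sequence, including itself.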

Since the end of 2022, it has become standard practice for me to use an LLM like ChatGPT for coding tasks, and this model shows how much LLMs have improved at programming. Alexandr Wang, CEO of Scale AI, which provides training data for the AI models of major players such as OpenAI and Google, described DeepSeek's product as "an earth-shattering model" in a speech at the World Economic Forum (WEF) in Davos last week. The larger model is more powerful, and its architecture is based on DeepSeek's Mixture-of-Experts (MoE) approach, with 21 billion "active" parameters. On price, DeepSeek's R1 model costs $2.19 per million output tokens while OpenAI's o1 costs $60 per million output tokens, making OpenAI's model roughly 27 times more expensive than DeepSeek's ($60 / $2.19 ≈ 27.4). This seems intuitively inefficient: the model should think more if it's making a harder prediction and less if it's making an easier one. His language is a bit technical, and there isn't a great shorter quote to take from that paragraph, so it might be easier simply to assume that he agrees with me. Massive activations in large language models. The combination of these innovations gives DeepSeek-V2 features that make it even more competitive among open models than its previous versions.
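
The "active parameters" figure comes from Mixture-of-Experts routing: a gating network scores every expert for each token and only the top-k experts run, so most of the weights sit idle on any given token. A minimal sketch of top-k routing follows; the four scaling "experts" and the gate scores are hypothetical toy values, and DeepSeek's MoE layers add refinements (such as shared experts) that this sketch does not model.

```python
import math
from typing import Callable

Expert = Callable[[list[float]], list[float]]


def top_k_route(gate_logits: list[float], k: int) -> list[tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    chosen = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    m = max(gate_logits[i] for i in chosen)
    exps = {i: math.exp(gate_logits[i] - m) for i in chosen}  # softmax over chosen only
    total = sum(exps.values())
    return [(i, exps[i] / total) for i in chosen]


def moe_forward(
    x: list[float], experts: list[Expert], gate_logits: list[float], k: int = 2
) -> list[float]:
    """Run only the routed experts; every other expert's parameters stay idle."""
    out = [0.0] * len(x)
    for i, weight in top_k_route(gate_logits, k):
        y = experts[i](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


# Four toy experts that just scale the input; only 2 of them run per token.
experts: list[Expert] = [lambda x, s=s: [s * xi for xi in x] for s in (1.0, 2.0, 3.0, 4.0)]
token = [1.0, -1.0]
gate = [0.1, 2.0, -1.0, 2.0]  # hypothetical gate scores for this token
print(top_k_route(gate, k=2))  # → [(1, 0.5), (3, 0.5)]
print(moe_forward(token, experts, gate))  # → [3.0, -3.0]
```

Each token here touches only 2 of the 4 experts. The same principle, at much larger scale, is how a 236B-parameter model like DeepSeek-Coder-V2 can activate only about 21B parameters (roughly 9%) per token.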

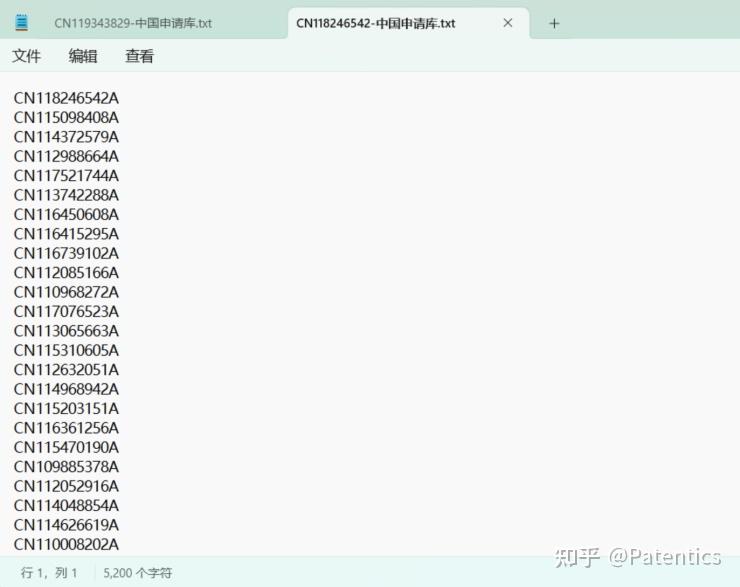

This makes it more efficient because it doesn't waste resources on unnecessary computations. Training, however, still requires significant computational resources because of the vast dataset. Training data: compared with the original DeepSeek-Coder, DeepSeek-Coder-V2 expanded the training data considerably, adding a further 6 trillion tokens for a total of 10.2 trillion tokens. DeepSeek-Coder-V2, which costs 20 to 50 times less than other models, is a significant upgrade over the original DeepSeek-Coder, with more extensive training data, larger and more efficient models, enhanced context handling, and advanced techniques such as Fill-In-The-Middle and reinforcement learning. The reinforcement learning leads to better alignment with human preferences in coding tasks, and the enhanced context handling means V2 can better understand and manage extensive codebases. The most popular variant, DeepSeek-Coder-V2, remains at the top in coding tasks and can be run with Ollama, making it particularly attractive for indie developers and coders.

Continue lets you easily build your own coding assistant directly inside Visual Studio Code and JetBrains IDEs with open-source LLMs. Continue's usage data, combined with the code that you ultimately commit, can be used to improve the LLM that you or your team use (if you allow it). If you are just starting your journey with AI, you can read my complete guide to using ChatGPT for beginners.
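
Fill-In-the-Middle (FIM) lets a code model complete a gap using both the code before and after the cursor, which is what makes it useful for editor autocomplete. The sketch below assembles a prefix-suffix-middle (PSM) prompt and splices the generated middle back in. The sentinel strings are hypothetical placeholders, because each model family defines its own special tokens; substitute the ones documented for the model you actually run.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FimTokens:
    # Hypothetical placeholder sentinels: replace with your model's documented tokens.
    begin: str = "<FIM_BEGIN>"
    hole: str = "<FIM_HOLE>"
    end: str = "<FIM_END>"


def build_fim_prompt(source: str, cursor: int, tokens: FimTokens = FimTokens()) -> str:
    """Split source at the cursor and wrap prefix/suffix in PSM order.

    The model is expected to generate the code that belongs at the hole.
    """
    if not 0 <= cursor <= len(source):
        raise ValueError("cursor out of range")
    prefix, suffix = source[:cursor], source[cursor:]
    return f"{tokens.begin}{prefix}{tokens.hole}{suffix}{tokens.end}"


def splice_completion(source: str, cursor: int, completion: str) -> str:
    """Insert the model's generated middle back at the cursor position."""
    return source[:cursor] + completion + source[cursor:]


code = "def add(a, b):\n    return \n"
cursor = code.index("return ") + len("return ")
print(build_fim_prompt(code, cursor))
print(splice_completion(code, cursor, "a + b"))
```

An editor plugin would send the prompt to the model and splice in whatever text comes back; `build_fim_prompt` and `splice_completion` are illustrative helper names, not part of Continue or Ollama.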
